Educational Data Mining

Revision as of 03:37, 5 April 2016

Mastery Grids aims to provide a better experience for both students and instructors by using automatic tools and techniques derived from applying machine learning and data mining algorithms to its data. In this section, we introduce some of the projects carried out on Mastery Grids' data for this purpose.

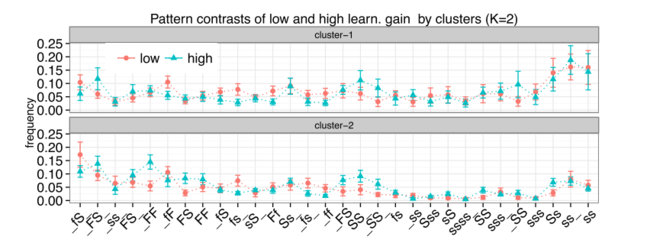

Problem Solving Genome for Students

In this research, we study students' behavioral patterns in attempting and repeating quizzes. We model students' attempt sequences and examine patterns of student behavior with parameterized exercises. Starting with micro-patterns (genes) that describe small chunks of repetitive student behavior, we construct individual student profiles (genomes) as frequency profiles. These profiles show the dominance of each gene (repetitive pattern) in an individual's behavior. We cluster the students using these profiles and study their learning gains in relation to their constructed genomes. The exploration of student genomes revealed that the individual genome is considerably stable and distinguishes students from their peers. It uniquely identifies a user among other users over the whole duration of the course, despite considerable growth in student knowledge over that time. While problem complexity does affect behavior patterns, we demonstrated that the genome is defined by some inherent characteristics of the user rather than by the difficulty profile of the problems she solves. At the group level, all students can be most reliably split into just two cohorts that differ considerably in their behavior. After that split, we are able to contrast successful and less successful learners by their behavior and identify “beneficial” and “harmful” genes for each cohort. In particular, it is interesting to observe that the behavior of successful learners in one cohort is somewhat closer to the behavior of the opposite cohort.
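The idea of a genome as a frequency profile of micro-patterns can be sketched in a few lines. This is a simplified illustration, not the method from the paper: it assumes each attempt is reduced to a single label (`S` for success, `F` for failure), uses fixed-length n-grams as a stand-in for mined micro-patterns, and compares profiles with an L1 distance. The function names and the label alphabet are hypothetical.

```python
from collections import Counter

def genome(attempts, n=2):
    """Relative frequency of each length-n micro-pattern in a label sequence.

    `attempts` is a sequence of labels, e.g. "SSFS" (assumed encoding).
    """
    grams = [tuple(attempts[i:i + n]) for i in range(len(attempts) - n + 1)]
    counts = Counter(grams)
    total = sum(counts.values())
    return {g: c / total for g, c in counts.items()} if total else {}

def distance(p, q):
    """L1 distance between two frequency profiles (0 = identical, 2 = disjoint)."""
    keys = set(p) | set(q)
    return sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)

# Stability check in the spirit of the text: a student's early and late
# profiles should be closer to each other than to another student's profile.
student_a_early, student_a_late = "SSFSSFSS", "SFSSFSSS"
student_b = "FFFSFFFS"
print(distance(genome(student_a_early), genome(student_a_late)))
print(distance(genome(student_a_early), genome(student_b)))
```

Profiles built this way can be fed to any standard clustering routine; the choice of n-gram length and distance measure here is an assumption made only for illustration.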

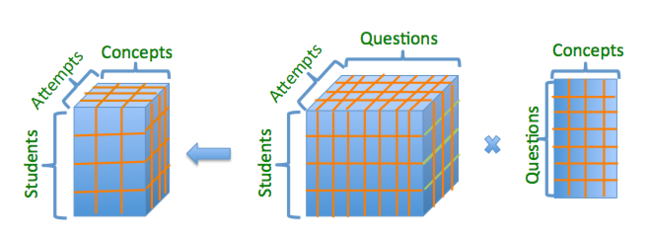

Tensor and Matrix Factorization in Predicting Student Performance

This research aims to predict students' success and failure on future questions using collaborative-filtering approaches, including tensor and matrix factorization. One benefit of these models is their ability to estimate the underlying skills of questions automatically. In this work, we study these approaches on educational data in two settings: considering the attempt (time) sequence of students, and considering the underlying concept structure of questions. We compare these approaches with state-of-the-art methods such as Feature-Aware Student knowledge Tracing (FAST), Bayesian Knowledge Tracing, and Performance Factors Analysis.
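As a rough illustration of the collaborative-filtering idea, the sketch below factorizes a small student-by-question correctness matrix with plain stochastic gradient descent. It is a generic matrix factorization under assumed hyperparameters (`k`, `lr`, `reg`, `epochs`) and toy data, not the specific models evaluated in this work; the latent dimensions play the role of automatically estimated skills.

```python
import random

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def train_mf(observations, n_students, n_questions,
             k=2, lr=0.05, reg=0.01, epochs=500, seed=0):
    """Fit student factors S and question factors Q so that dot(S[s], Q[q]) ~ y.

    `observations` is a list of (student, question, correct) triples with
    correct in {0, 1}. All hyperparameters are illustrative assumptions.
    """
    rng = random.Random(seed)
    S = [[rng.uniform(0.0, 0.2) for _ in range(k)] for _ in range(n_students)]
    Q = [[rng.uniform(0.0, 0.2) for _ in range(k)] for _ in range(n_questions)]
    for _ in range(epochs):
        for s, q, y in observations:
            err = y - dot(S[s], Q[q])
            for f in range(k):
                s_f, q_f = S[s][f], Q[q][f]
                # Gradient step on squared error with L2 regularization.
                S[s][f] += lr * (err * q_f - reg * s_f)
                Q[q][f] += lr * (err * s_f - reg * q_f)
    return S, Q

def mse(observations, S, Q):
    return sum((y - dot(S[s], Q[q])) ** 2 for s, q, y in observations) / len(observations)

# Toy data: student 0 succeeds everywhere, student 2 fails everywhere,
# and student 1's attempt on question 1 is held out for prediction.
observations = [
    (0, 0, 1), (0, 1, 1), (0, 2, 1),
    (1, 0, 1), (1, 2, 1),
    (2, 0, 0), (2, 1, 0), (2, 2, 0),
]
S, Q = train_mf(observations, n_students=3, n_questions=3)
print("training MSE:", round(mse(observations, S, Q), 4))
print("held-out prediction for student 1, question 1:", round(dot(S[1], Q[1]), 3))
```

A tensor variant would add a third factor for the attempt index; that extension is omitted here to keep the sketch short.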

Feedback-Driven Tensor Factorization for Simultaneously Modeling Student Knowledge and Course Content

Although the tasks of domain modeling, predicting student performance, and student knowledge modeling are interrelated, these problems are usually addressed separately in the educational data mining literature. In addition, traditional tensor and matrix factorization algorithms, which can extract the domain model while predicting student performance, are not designed for educational purposes: they ignore the fact that a student's knowledge at each step is related to her knowledge at previous steps and to the concepts she is practicing with the current learning resources. In this work, we propose a tensor factorization algorithm that can perform all of the aforementioned tasks simultaneously, while imposing a constraint that students' knowledge increases, using the feedback received from the current learning resources.
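The knowledge-increase constraint can be pictured with a small sketch. This is not the proposed algorithm: it only shows one simple way, assumed here for illustration, to keep a per-attempt knowledge estimate from decreasing (a running maximum per concept) and how such an estimate could be combined with a question's concept weights to score a prediction. The function names and the data layout are hypothetical.

```python
def enforce_non_decreasing(knowledge):
    """Clamp a knowledge trajectory so no concept estimate ever drops.

    `knowledge[t][c]` is the estimated knowledge of concept c at attempt t
    (assumed layout). Returns a new list; the input is left untouched.
    """
    clamped = []
    for row in knowledge:
        if not clamped:
            clamped.append(list(row))
        else:
            previous = clamped[-1]
            clamped.append([max(v, p) for v, p in zip(row, previous)])
    return clamped

def predict_score(knowledge_at_t, question_concepts):
    """Score a question as the weighted sum of the student's concept knowledge."""
    return sum(k * w for k, w in zip(knowledge_at_t, question_concepts))

raw = [[0.2, 0.5], [0.1, 0.6], [0.4, 0.3]]
trajectory = enforce_non_decreasing(raw)
print(trajectory)
print(predict_score(trajectory[-1], [1.0, 0.0]))
```

In a factorization setting, a step like this could be applied to the student-knowledge factor after each update, while the question-concept weights correspond to the extracted domain model.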

Multifaceted Evaluation of Student Models for Practitioners

We have proposed two state-of-the-art frameworks for evaluating student models in a data-driven manner for practitioners: the Polygon framework, and the Learner Effort-Outcomes Paradigm.

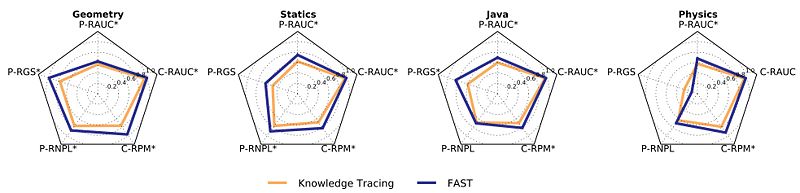

Polygon framework

Latent variable models, such as the popular Knowledge Tracing method, are often used to enable adaptive tutoring systems to personalize education. However, finding optimal parameters for latent variable models is usually a difficult non-convex optimization problem. Prior work has reported that latent variable models obtained from educational data vary in their predictive performance, plausibility, and consistency. Unfortunately, there are still no unified quantitative measurements of these properties. This work proposes a general unified framework, which we call Polygon, for multifaceted evaluation of student models. The framework takes all three dimensions mentioned above into consideration and offers novel metrics for the quantitative comparison of different student models. These properties affect the effectiveness of the tutoring experience in ways that traditional predictive performance metrics fail to capture. We demonstrate our methodology by comparing Knowledge Tracing with a recent model called Feature-Aware Student knowledge Tracing (FAST) on datasets from different tutoring systems. Our analysis suggests that FAST generally improves on Knowledge Tracing along all dimensions studied.
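For readers unfamiliar with Knowledge Tracing, the sketch below shows the standard four-parameter update (initial knowledge, learn, guess, slip) and one simple parameter sanity check. The check `guess + slip < 1` is not a Polygon metric; it is included only as an assumed example of what a plausibility concern looks like, since it is the condition under which a correct answer raises the estimate of knowledge. Parameter values are made up.

```python
def p_correct(p_known, guess, slip):
    """Probability of a correct answer given the current knowledge estimate."""
    return p_known * (1 - slip) + (1 - p_known) * guess

def update(p_known, correct, learn, guess, slip):
    """One Knowledge Tracing step: condition on the answer, then apply learning."""
    if correct:
        posterior = p_known * (1 - slip) / p_correct(p_known, guess, slip)
    else:
        posterior = p_known * slip / (1 - p_correct(p_known, guess, slip))
    return posterior + (1 - posterior) * learn

def answers_are_informative(guess, slip):
    """True when a correct answer increases the knowledge estimate."""
    return guess + slip < 1

p = 0.5
for answer in [True, True, False, True]:
    p = update(p, answer, learn=0.1, guess=0.2, slip=0.1)
    print(round(p, 4))
print(answers_are_informative(guess=0.2, slip=0.1))
```

Two parameter sets can give similar predictive accuracy while only one passes a check like this, which is the kind of gap a multifaceted evaluation is meant to expose.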

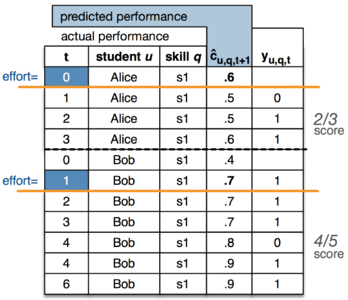

Learner Effort-Outcomes Paradigm (Leopard)

Classification evaluation metrics are often used to evaluate adaptive tutoring systems, that is, programs that teach and adapt to humans. Unfortunately, it is not clear how intuitive these metrics are for practitioners with little machine learning background. Moreover, our experiments suggest that the existing convention for evaluating tutoring systems may lead to suboptimal decisions. We propose the Learner Effort-Outcomes Paradigm (Leopard), a new framework to evaluate adaptive tutoring. We introduce Teal and White, novel automatic metrics that apply Leopard and quantify the amount of effort required to achieve a learning outcome. Our experiments suggest that our metrics are a better alternative for evaluating adaptive tutoring.
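The effort-versus-outcome view can be illustrated with a toy calculation. This is not Teal or White: it assumes a simple learning curve in which each practice opportunity moves the probability of knowing a skill toward 1 by a fixed learning rate, and a tutor that stops giving practice once that probability reaches a mastery threshold. Effort is then the number of practice items given, and outcome is the probability reached. All names and numbers are hypothetical.

```python
def effort_and_outcome(p_init, p_learn, threshold=0.95, max_items=100):
    """Count practice items until the knowledge estimate reaches the threshold.

    Returns (effort, outcome): items practiced and the final probability.
    """
    p, effort = p_init, 0
    while p < threshold and effort < max_items:
        p = p + (1 - p) * p_learn  # one practice opportunity
        effort += 1
    return effort, p

# Two hypothetical student models that disagree on how fast students learn.
for name, p_learn in [("fast-learning model", 0.3), ("slow-learning model", 0.1)]:
    effort, outcome = effort_and_outcome(p_init=0.2, p_learn=p_learn)
    print(name, "effort:", effort, "outcome:", round(outcome, 3))
```

Both models end at roughly the same outcome but prescribe very different amounts of practice, a difference that a classification metric alone would not report.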

Publications

- Guerra, J., Sahebi, S., Lin, Y.-R., and Brusilovsky, P. (2014) The Problem Solving Genome: Analyzing Sequential Patterns of Student Work with Parameterized Exercises. In: J. Stamper, Z. Pardos, M. Mavrikis and B. M. McLaren (eds.) Proceedings of the 7th International Conference on Educational Data Mining (EDM 2014), London, UK, July 4-7, 2014, pp. 153-160. ([presentation](http://www.slideshare.net/huangyun/guerra-the-problemsolvinggenome)) ([paper](http://educationaldatamining.org/EDM2014/uploads/procs2014/long%20papers/153_EDM-2014-Full.pdf))

- Sahebi, S., Huang, Y., and Brusilovsky, P. (2014) Parameterized Exercises in Java Programming: Using Knowledge Structure for Performance Prediction. In: Proceedings of the Second Workshop on AI-supported Education for Computer Science (AIEDCS) at the 12th International Conference on Intelligent Tutoring Systems (ITS 2014), Honolulu, Hawaii, June 6, 2014. ([paper](http://d-scholarship.pitt.edu/21915/)) ([presentation](http://www.slideshare.net/chagh/parameterized-exercises-in-java-programming-using-knowledge-structure-for-performance-prediction))

- Sahebi, S., Huang, Y., and Brusilovsky, P. (2014) Predicting Student Performance in Solving Parameterized Exercises. In: S. Trausan-Matu, K. Boyer, M. Crosby and K. Panourgia (eds.) Proceedings of the 12th International Conference on Intelligent Tutoring Systems (ITS 2014), Honolulu, HI, USA, June 5-9, 2014, Springer International Publishing, pp. 496-503. ([paper](http://d-scholarship.pitt.edu/21916/)) ([presentation](http://www.slideshare.net/chagh/its14-pitttemplate))

- González-Brenes, J. P., Huang, Y. (2014) The White Method: Towards Automatic Evaluation Metrics for Adaptive Tutoring Systems. In: Proceedings of NIPS 2014 Workshop on Human Propelled Machine Learning, Montreal, Canada, December 13, 2014. ([paper](http://d-scholarship.pitt.edu/26061/))

- Huang, Y., González-Brenes, J. P., Kumar, R., Brusilovsky, P. (2015) A Framework for Multifaceted Evaluation of Student Models. In: Proceedings of the 8th International Conference on Educational Data Mining (EDM 2015), Madrid, Spain, pp. 203-210. ([paper](http://www.educationaldatamining.org/EDM2015/uploads/papers/paper_164.pdf)) ([presentation](http://www.slideshare.net/huangyun/2015edm-a-framework-for-multifaceted-evaluation-of-student-models-polygon))

- Huang, Y., González-Brenes, J. P., Brusilovsky, P. (2015) Challenges of Using Observational Data to Determine the Importance of Example Usage. In: Proceedings of the 17th International Conference on Artificial Intelligence in Education (AIED 2015), Madrid, Spain, pp. 633-637. ([paper](http://d-scholarship.pitt.edu/26056/))

- González-Brenes, J. P., Huang, Y. (2015) Your model is predictive— but is it useful? Theoretical and Empirical Considerations of a New Paradigm for Adaptive Tutoring Evaluation. In: Proceedings of the 8th International Conference on Educational Data Mining (EDM 2015), Madrid, Spain, pp. 187-194. (paper) (presentation)