Learner Modeling

Revision as of 04:09, 5 April 2016

CUMULATE

Introduction

CUMULATE (Centralized User Modeling Architecture for TEaching) is a central user modeling server designed to provide user modeling functionality to student-adaptive educational systems. It collects evidence (events) about student learning from multiple servers that interact with the student. It stores students' activities and infers their learning characteristics, which form the basis for adapting to each individual student. ... External and internal inference agents process the flow of events and update the values in the inference model of the server. Each inference agent is responsible for maintaining a specific property in the inference model, such as the current motivation level of the student or the student's current level of knowledge for each course topic. ==> more

Publications

- Zadorozhny, V., Yudelson, M., and Brusilovsky, P. (2008) A Framework for Performance Evaluation of User Modeling Servers for Web Applications. Web Intelligence and Agent Systems 6(2), 175-191. (DOI)

- Yudelson, M., Brusilovsky, P., and Zadorozhny, V. (2007) A user modeling server for contemporary adaptive hypermedia: An evaluation of the push approach to evidence propagation. In: C. Conati, K. F. McCoy, and G. Paliouras (eds.) User Modeling, volume 4511 of Lecture Notes in Computer Science, pp. 27-36. Springer. (PDF, DOI)

- Brusilovsky, P., Sosnovsky, S. A., and Shcherbinina, O. (2005) User Modeling in a Distributed E-Learning Architecture. Paper presented at the 10th International Conference on User Modeling (UM 2005), Edinburgh, Scotland, UK, July 24-29, 2005. (PDF, DOI)

Asymptotic assessment of user knowledge

Asymptotic knowledge assessment is CUMULATE's legacy user modeling algorithm for computing user knowledge with respect to problem-solving. ==> more

Feature-Aware Student knowledge Tracing (FAST)

Introduction

Feature-Aware Student knowledge Tracing (FAST) is an efficient student model with state-of-the-art predictive performance, created by the PAWS Lab and Pearson. It allows general, flexible features to be incorporated into Knowledge Tracing, the most popular student model. It is the first model to unify existing, specially designed student models based on the Knowledge Tracing framework and to combine their advantages. We demonstrate FAST's flexibility with examples of feature sets that are relevant to a wide audience: we have used features in FAST to model (i) multiple subskills, (ii) temporal Item Response Theory, and (iii) expert knowledge. Compared with Knowledge Tracing, FAST (1) improves classification performance by up to 25%, (2) generates more interpretable and consistent parameters, and (3) is 300 times faster. In a follow-up study, we compared FAST, which is designed as a general-purpose model, to the model from that year's best paper (a single-purpose model), with favorable results. The main paper was nominated for the Best Paper Award at a top-tier conference in 2014. The main and follow-up papers have been cited by leading researchers in the field at Carnegie Mellon University, Stanford, Cornell, ETH Zurich, and other institutions, with 34 citations in total between 2014 and April 2016. ==> more
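To make the idea of putting features into Knowledge Tracing more concrete, the following is a minimal sketch, not the FAST toolkit itself. It assumes a two-state (unknown/known) tracing model with no forgetting, in which the probability of a correct answer in each state comes from a logistic function of a feature vector rather than from fixed guess and slip constants. The function names, the parameter layout, and the absence of any parameter fitting are illustrative assumptions.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def p_correct_in_state(weights, bias, features):
    """Logistic emission: P(correct | latent state, features)."""
    return sigmoid(bias + sum(w * f for w, f in zip(weights, features)))

def trace(observations, feature_rows, p_init, p_learn, known, unknown):
    """Trace one student's knowledge of one skill.

    observations: list of 0/1 outcomes (1 = correct).
    feature_rows: one feature vector per observation.
    known / unknown: (weights, bias) pairs for each latent state
                     (hypothetical layout chosen for this sketch).
    Returns (P(correct) predicted before each observation, final P(known)).
    """
    p_known = p_init
    predictions = []
    for correct, feats in zip(observations, feature_rows):
        e_known = p_correct_in_state(known[0], known[1], feats)
        e_unknown = p_correct_in_state(unknown[0], unknown[1], feats)
        # Predict the outcome before seeing it.
        pred = p_known * e_known + (1.0 - p_known) * e_unknown
        predictions.append(pred)
        # Bayesian update of P(known) given the observed outcome.
        if correct:
            posterior = p_known * e_known / pred
        else:
            posterior = p_known * (1.0 - e_known) / (1.0 - pred)
        # Learning transition (no forgetting assumed).
        p_known = posterior + (1.0 - posterior) * p_learn
    return predictions, p_known
```

With all feature weights set to zero, the biases alone determine the emission probabilities and the sketch reduces to classic Knowledge Tracing with constant guess and slip values.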

Publications

- Gonzalez-Brenes, J. P., Huang, Y., and Brusilovsky, P. (2013) FAST: Feature-Aware Student Knowledge Tracing. In: Proceedings of NIPS 2013 Workshop on Data Driven Education, Lake Tahoe, NV, December 10, 2013. (paper)

- González-Brenes, J. P., Huang, Y., and Brusilovsky, P. (2014) General Features in Knowledge Tracing to Model Multiple Subskills, Temporal Item Response Theory, and Expert Knowledge. In: J. Stamper, Z. Pardos, M. Mavrikis and B. M. McLaren (eds.) Proceedings of the 7th International Conference on Educational Data Mining (EDM 2014), London, UK, July 4-7, 2014, pp. 84-91. (First two authors contributed equally. Nominated for Best Paper Award) (presentation, paper, tutorial, code)

- Khajah, M. M., Huang, Y., González-Brenes, J. P., Mozer, M. C., and Brusilovsky, P. (2014) Integrating Knowledge Tracing and Item Response Theory: A Tale of Two Frameworks. In: I. Cantador, M. Chi, R. Farzan and R. Jäschke (eds.) Proceedings of Workshop on Personalization Approaches in Learning Environments (PALE 2014) at the 22nd International Conference on User Modeling, Adaptation, and Personalization, UMAP 2014, Aalborg, Denmark, July 11, 2014, CEUR, pp. 7-12. (First three authors contributed equally) (presentation, paper)

- Sahebi, S., Huang, Y., and Brusilovsky, P. (2014) Parameterized Exercises in Java Programming: Using Knowledge Structure for Performance Prediction. In: Proceedings of the Second Workshop on AI-supported Education for Computer Science (AIEDCS) at the 12th International Conference on Intelligent Tutoring Systems (ITS 2014), Honolulu, Hawaii, June 6, 2014. (paper) (presentation)

- Huang, Y., González-Brenes, J. P., and Brusilovsky, P. (2015) The FAST toolkit for Unsupervised Learning of HMMs with Features. In: The Machine Learning Open Source Software workshop at the 32nd International Conference on Machine Learning (ICML-MLOSS 2015), Lille, France, July 10, 2015. (paper, code)

- Huang, Y., González-Brenes, J. P., Kumar, R., Brusilovsky, P. (2015) A Framework for Multifaceted Evaluation of Student Models. In: Proceedings of the 8th International Conference on Educational Data Mining (EDM 2015), Madrid, Spain, pp. 203-210. (paper) (presentation)

Content Model Reduction for Better Learner Model

Introduction

When modeling student knowledge and predicting student performance, adaptive educational systems frequently rely on content models that connect learning content (i.e., problems) with the underlying domain knowledge (i.e., knowledge components, KCs) required to complete it. In some domains, such as programming, the number of KCs associated with advanced learning content is quite large. This complicates modeling by adding noise and reduces efficiency. We argue that the efficiency of modeling and prediction in such domains can be improved without loss of quality by reducing each problem's content model to a subset of its most important KCs. To test this hypothesis, we evaluate several KC reduction methods with varying reduction sizes by assessing the prediction performance of Knowledge Tracing and Performance Factor Analysis. The results show that predictive performance with reduced content models can be significantly better than with the original ones, with the extra benefit of reduced time and space requirements.
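The reduction step can be illustrated with a minimal sketch. It assumes that each KC already has an importance score and that a problem keeps only its k highest-scoring KCs. The scoring scheme, the function name, and the data layout are hypothetical. The text above does not say which reduction methods were evaluated, so this is not a reproduction of any of them.

```python
def reduce_content_model(content_model, importance, k):
    """Keep at most k KCs per problem, ranked by an importance score.

    content_model: dict mapping problem id -> list of KC names.
    importance: dict mapping KC name -> score (assumed given; a KC
                without a score is treated as least important).
    """
    reduced = {}
    for problem, kcs in content_model.items():
        ranked = sorted(kcs, key=lambda kc: importance.get(kc, 0.0), reverse=True)
        reduced[problem] = ranked[:k]
    return reduced

# Usage with made-up problems and scores:
content_model = {"p1": ["for_loop", "array", "int_variable", "print"]}
importance = {"for_loop": 0.9, "array": 0.7, "int_variable": 0.1, "print": 0.2}
reduced = reduce_content_model(content_model, importance, k=2)
```

The reduced model can then be passed to a student model such as Knowledge Tracing or Performance Factor Analysis in place of the full one, so that predictive performance can be compared across reduction sizes.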

Publications

- Huang, Y., Xu, Y., and Brusilovsky, P. (2014) Doing More with Less: Student Modeling and Performance Prediction with Reduced Content Models. In: V. Dimitrova, et al. (eds.) Proceedings of 22nd Conference on User Modeling, Adaptation and Personalization (UMAP 2014), Aalborg, Denmark, July 7-11, 2014, Springer Verlag, pp. 338-349. (presentation, paper)

Multifaceted Evaluation of Learner Models for Practitioners

We have proposed two state-of-the-art frameworks that help practitioners evaluate student models in a data-driven manner: the Polygon framework and the Learner Effort-Outcomes Paradigm. We also explored the challenges of using observational data to answer research questions such as determining the importance of example usage.

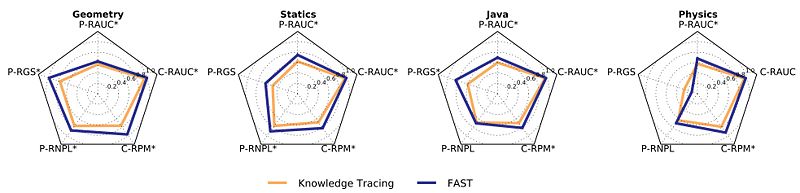

Polygon framework

Latent variable models, such as the popular Knowledge Tracing method, are often used to enable adaptive tutoring systems to personalize education. However, finding optimal parameters for latent variable models is usually a difficult non-convex optimization problem. Prior work has reported that latent variable models obtained from educational data vary in their predictive performance, plausibility, and consistency. Unfortunately, there are still no unified quantitative measurements of these properties. This paper suggests a general unified framework (that we call Polygon) for multifaceted evaluation of student models. The framework takes all three dimensions mentioned above into consideration and offers novel metrics for the quantitative comparison of different student models. These properties affect the effectiveness of the tutoring experience in ways that traditional predictive performance metrics fail to capture. The present work demonstrates our methodology by comparing Knowledge Tracing with a recent model called Feature-Aware Student knowledge Tracing (FAST) on datasets from different tutoring systems. Our analysis suggests that FAST generally improves on Knowledge Tracing along all dimensions studied.

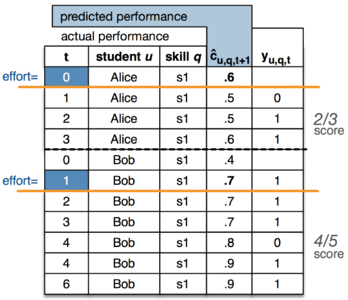

Learner Effort-Outcomes Paradigm (Leopard)

Classification evaluation metrics are often used to evaluate adaptive tutoring systems, that is, programs that teach and adapt to humans. Unfortunately, it is not clear how intuitive these metrics are for practitioners with little machine learning background. Moreover, our experiments suggest that the existing conventions for evaluating tutoring systems may lead to suboptimal decisions. We propose the Learner Effort-Outcomes Paradigm (Leopard), a new framework to evaluate adaptive tutoring. We introduce Teal and White, novel automatic metrics that apply Leopard and quantify the amount of effort required to achieve a learning outcome. Our experiments suggest that our metrics are a better alternative for evaluating adaptive tutoring.
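As a rough illustration of what quantifying effort can mean, the sketch below counts how many practice opportunities a student is given before a student model's mastery estimate first reaches a threshold. This is neither the Teal nor the White metric. The function name, the input format, and the 0.95 threshold are assumptions made only for illustration.

```python
def effort_until_mastery(mastery_estimates, threshold=0.95):
    """Count practice opportunities needed to reach the mastery threshold.

    mastery_estimates: the model's estimated probability of mastery after
                       each practice opportunity, in order.
    Returns the 1-based index of the first estimate at or above the
    threshold, or the sequence length if the threshold is never reached.
    """
    for i, p in enumerate(mastery_estimates):
        if p >= threshold:
            return i + 1
    return len(mastery_estimates)

# Usage with made-up estimates from two hypothetical student models:
effort_a = effort_until_mastery([0.40, 0.70, 0.96, 0.99])
effort_b = effort_until_mastery([0.40, 0.55, 0.70, 0.80])
```

Under this reading, two student models are compared by how much practice each would require and what outcome students reach with that practice, rather than by classification accuracy alone.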

Publications

- Huang, Y., González-Brenes, J. P., Kumar, R., Brusilovsky, P. (2015) A Framework for Multifaceted Evaluation of Student Models. In: Proceedings of the 8th International Conference on Educational Data Mining (EDM 2015), Madrid, Spain, pp. 203-210. (paper) (presentation)

- Huang, Y., González-Brenes, J. P., Brusilovsky, P. (2015) Challenges of Using Observational Data to Determine the Importance of Example Usage. In: Proceedings of the 17th International Conference on Artificial Intelligence in Education (AIED 2015), Madrid, Spain, pp. 633-637. (paper)

- Gonzalez-Brenes, J. P., Huang, Y. (2014) The White Method: Towards Automatic Evaluation Metrics for Adaptive Tutoring Systems. In: Proceedings of NIPS 2014 Workshop on Human Propelled Machine Learning, Montreal, Canada, December 13, 2014 (paper)

- Gonzalez-Brenes, J. P., Huang, Y. (2015) Your model is predictive— but is it useful? Theoretical and Empirical Considerations of a New Paradigm for Adaptive Tutoring Evaluation. In: Proceedings of the 8th International Conference on Educational Data Mining (EDM 2015), Madrid, Spain, pp. 187-194. (paper presentation)

- Gonzalez-Brenes, J. P., Huang, Y. (2015) The Leopard Framework: Towards understanding educational technology interventions with a Pareto Efficiency Perspective. In: The ICML 2015 Workshop on Machine Learning for Education (ICML 2015), Lille, France, 2015. (paper)

- Gonzalez-Brenes, J. P., Huang, Y. (2015) Using Data from Real and Simulated Learners to Evaluate Adaptive Tutoring Systems. In: 2nd AIED Workshop on Simulated Learners at the 17th Intl. Conf. on Artificial Intelligence in Education (AIED 2015), Madrid, Spain, 2015. (paper)