CUMULATE user- and domain-adaptive user modeling


This stream of work aims to improve CUMULATE's legacy one-size-fits-all algorithm for modeling users' problem-solving activity by creating a context-sensitive user modeling algorithm that is adaptable/adaptive both to individual users' cognitive abilities and to the complexity of individual problems.

A new parametrized user modeling algorithm has been devised, and a series of studies has been set up to evaluate the algorithm and its adaptability/adaptivity.


Study 1

This study is a retrospective comparative evaluation of CUMULATE's legacy and parametrized user modeling algorithms. The evaluation uses usage logs collected from 6 Database Management courses offered in the Fall 2007 and Spring 2008 semesters at the University of Pittsburgh, National College of Ireland, and Dublin City University. All courses had roughly the same structure and an identical set of problems served by the SQLKnoT system.

Scenario

We compared the legacy CUMULATE algorithm with three versions of the parametrized algorithm. The versions differed in the parameters used for user modeling.

- The first version attempted to shadow the legacy algorithm with best-guess parameters, without discriminating between individual users or problems.

- The second version did not discriminate between users or problems either. However, its parameters were obtained by fitting one global user parameter and one global problem parameter signature, which were then used for modeling.

- The third version used a set of user-specific parameters and problem-specific parameter signatures for modeling.
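The three versions differ mainly in where their parameters come from. The sketch below illustrates that distinction under loud assumptions: the page does not say what a user parameter or a problem "parameter signature" contains, so a float and a tuple of floats stand in for them; `ParameterSet` and `resolve` are hypothetical names, and all numbers are illustrative.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple

# Hypothetical stand-ins: the real contents of a user parameter and of a
# problem "parameter signature" are not specified on this page.
UserParam = float
ProblemSignature = Tuple[float, ...]


@dataclass
class ParameterSet:
    """Parameters used by one version of the parametrized algorithm."""
    global_user: UserParam
    global_problem: ProblemSignature
    per_user: Dict[str, UserParam] = field(default_factory=dict)
    per_problem: Dict[str, ProblemSignature] = field(default_factory=dict)

    def resolve(self, user_id: str, problem_id: str) -> Tuple[UserParam, ProblemSignature]:
        # Individual values win when present; otherwise fall back to the
        # global ones. With empty maps this acts like a purely global version.
        user = self.per_user.get(user_id, self.global_user)
        problem = self.per_problem.get(problem_id, self.global_problem)
        return user, problem


# Version 1: hand-picked ("best guess") global values only.
best_guess = ParameterSet(global_user=0.5, global_problem=(0.5, 0.5))
# Version 3: individual values for known users and problems.
individual = ParameterSet(
    global_user=0.4, global_problem=(0.3, 0.6),
    per_user={"u1": 0.7}, per_problem={"p1": (0.2, 0.9)},
)
# Individual problem signatures combined with a global user parameter.
ind_glob = ParameterSet(
    global_user=0.4, global_problem=(0.3, 0.6),
    per_problem={"p1": (0.2, 0.9)},
)
```

Leaving `per_user` empty while filling `per_problem` gives the mix of individual problem parameters and a global user parameter that the table legend calls Cp:ind/glob.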

Procedures

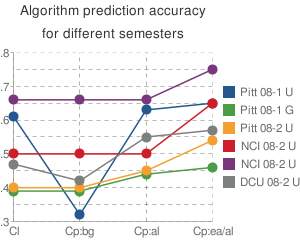

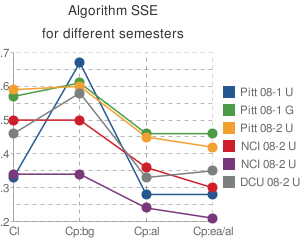

Accuracy and SSE (sum of squared errors) were used as comparison metrics. Both were computed over the entire log of each of the 6 semester courses, for each of the 4 algorithm versions.
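A minimal sketch of the two metrics, assuming each model outputs a predicted probability of success before every attempt and each attempt's outcome is recorded as 1 (correct) or 0 (incorrect). The 0.5 threshold used for accuracy is an assumption; the page does not state how predictions were binarized.

```python
from typing import Sequence


def sse(predicted: Sequence[float], observed: Sequence[int]) -> float:
    """Sum of squared errors between predicted probabilities and 0/1 outcomes."""
    if len(predicted) != len(observed):
        raise ValueError("predicted and observed must have the same length")
    return sum((y - p) ** 2 for p, y in zip(predicted, observed))


def accuracy(predicted: Sequence[float], observed: Sequence[int],
             threshold: float = 0.5) -> float:
    """Fraction of attempts whose thresholded prediction matches the outcome."""
    if len(predicted) != len(observed) or not predicted:
        raise ValueError("need two non-empty sequences of the same length")
    # A prediction at or above the (assumed) threshold counts as "success".
    hits = sum((p >= threshold) == (y == 1) for p, y in zip(predicted, observed))
    return hits / len(predicted)


predicted = [0.9, 0.2, 0.6, 0.4]  # illustrative model outputs
observed = [1, 0, 0, 1]           # illustrative outcomes
log_sse = sse(predicted, observed)
log_accuracy = accuracy(predicted, observed)
```

SSE rewards well-calibrated probabilities, while accuracy only checks which side of the threshold a prediction falls on, so the two can rank algorithms differently.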

For the legacy CUMULATE and parametrized CUMULATE best-guess algorithms, the data was used as is. The globally parametrized and individually parametrized CUMULATE algorithms were supplied with pre-fit global or individual user- and problem-specific parameters. Only the data from one of the early courses (Fall'07, Pitt, undergraduate) was used to fit these parameters; the data from all 6 courses was used to compute the user models. See the table below for details and basic log statistics.
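The fit-once, apply-everywhere procedure can be sketched as follows. The update rule is a hypothetical stand-in (an exponential moving average of a user's outcomes on a problem), not CUMULATE's actual algorithm, and the grid search over a single global `rate` is an illustrative fitting method; `predict`, `sse_of`, and `fit_global_rate` are hypothetical names and the logs are toy data.

```python
from typing import Dict, List, Sequence, Tuple

# (user_id, problem_id, outcome), in chronological order; outcome is 1 or 0.
Attempt = Tuple[str, str, int]


def predict(log: Sequence[Attempt], rate: float, prior: float = 0.5) -> List[float]:
    """Predict each attempt's success probability before its outcome is seen.

    Hypothetical stand-in model: the knowledge estimate for a (user, problem)
    pair is an exponential moving average of past outcomes. This is NOT
    CUMULATE's update rule.
    """
    knowledge: Dict[Tuple[str, str], float] = {}
    predictions: List[float] = []
    for user, problem, outcome in log:
        k = knowledge.get((user, problem), prior)
        predictions.append(k)
        # Move the estimate toward the observed outcome by a fraction `rate`.
        knowledge[(user, problem)] = k + rate * (outcome - k)
    return predictions


def sse_of(log: Sequence[Attempt], rate: float) -> float:
    """Sum of squared errors of the stand-in model on one log."""
    preds = predict(log, rate)
    return sum((outcome - p) ** 2 for p, (_, _, outcome) in zip(preds, log))


def fit_global_rate(train_log: Sequence[Attempt], grid: Sequence[float] = ()) -> float:
    """Pick the single global rate that minimizes SSE on the training log."""
    candidates = list(grid) or [i / 20 for i in range(1, 21)]
    return min(candidates, key=lambda r: sse_of(train_log, r))


# Fit on one (toy) course log, then apply the same rate to every course log.
train = [("u1", "p1", 0), ("u1", "p1", 1), ("u1", "p1", 1),
         ("u2", "p1", 1), ("u2", "p1", 1)]
rate = fit_global_rate(train)
course_logs = {"train": train, "other": [("u3", "p2", 1), ("u3", "p2", 0)]}
sse_by_course = {name: sse_of(log, rate) for name, log in course_logs.items()}
```

Under the same assumptions, running `fit_global_rate` on each user's or each problem's attempts separately would yield individual rather than global parameters.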

| Semester | School | Level | Procedures | Users | Datapoints | Attempts per user | Problems per user |
|---|---|---|---|---|---|---|---|
| Fall'07 | Pitt | Und. | Obtain global/individual user/problem-specific parameters; compute CL, Cp:bg, Cp:glob, Cp:ind | 27 | 4224 | 156.44 | 29.96 |
| Fall'07 | Pitt | Grad. | Compute CL, Cp:bg, Cp:glob, Cp:ind/glob | 20 | 1233 | 61.65 | 29.95 |
| Spring'08 | Pitt | Und. | Compute CL, Cp:bg, Cp:glob, Cp:ind/glob | 15 | 458 | 26.94 | 16.35 |
| Spring'08 | NCI | Und. | Compute CL, Cp:bg, Cp:glob, Cp:ind/glob | 17 | 216 | 12.71 | 6.59 |
| Spring'08 | NCI | Und. | Compute CL, Cp:bg, Cp:glob, Cp:ind/glob | 18 | 142 | 7.89 | 4.00 |
| Spring'08 | DCU | Und. | Compute CL, Cp:bg, Cp:glob, Cp:ind/glob | 52 | 4574 | 81.68 | 22.82 |

CL - legacy CUMULATE algorithm; Cp:bg - parametrized CUMULATE algorithm with guessed global parameters; Cp:glob - parametrized CUMULATE algorithm with fitted global user/problem parameters; Cp:ind - parametrized CUMULATE algorithm with individual user/problem parameters; Cp:ind/glob - parametrized CUMULATE algorithm with individual problem parameters and a global user parameter.

Results

As the figures show, neither guessed nor globally fit parameters give the new parametrized algorithm an edge. Individual problem-specific parameters, however, do give it an advantage, and in the majority of cases this advantage is significant (the exception being the only graduate-level course, offered at Pitt in the Fall 2007 semester).

Publication

The results were presented at the i-fest 2009 poster competition at the School of Information Sciences, University of Pittsburgh, where the poster won second place in the PhD track. The poster can be accessed here.

Critique

References

- Papers
- Dataset
- Code