Learner Modeling

Contents

CUMULATE

asymptotic assessment of user knowledge

Feature-Aware Student knowledge Tracing (FAST)

Content Model Reduction for Better Learner Model

When modeling student knowledge and predicting student performance, adaptive educational systems frequently rely on content models that connect learning content (i.e., problems) with the underlying domain knowledge (i.e., knowledge components, KCs) required to complete it. In some domains, such as programming, the number of KCs associated with advanced learning content is quite large. This complicates modeling by increasing noise and decreasing efficiency. We argue that the efficiency of modeling and prediction in such domains could be improved without loss of quality by reducing each problem's content model to a subset of its most important KCs. To test this hypothesis, we evaluate several KC reduction methods at varying reduction sizes by assessing the prediction performance of Knowledge Tracing and Performance Factor Analysis. The results show that predictive performance with reduced content models can be significantly better than with the original ones, with the extra benefit of reduced time and space requirements.
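As a rough illustration of the idea, the sketch below reduces one problem's content model to its top-k KCs and then computes a Performance Factor Analysis style prediction over the reduced set. The prediction follows the standard PFA form (a logistic function of per-KC terms built from prior successes and failures). The reduction step is only an assumption for illustration: the importance weights, the KC names, and the helper `reduce_content_model` are hypothetical, and the reduction methods actually evaluated in the paper are not described in this summary.

```python
import math


def reduce_content_model(kc_weights, k):
    """Keep the k highest-weighted KCs of one problem.

    kc_weights maps KC name -> importance weight. How such weights are
    obtained is an assumption of this sketch, not the paper's method.
    Ties are broken alphabetically so the result is deterministic.
    """
    ranked = sorted(kc_weights.items(), key=lambda kv: (-kv[1], kv[0]))
    return [kc for kc, _ in ranked[:k]]


def pfa_probability(kcs, params, successes, failures):
    """Standard PFA form: sigmoid of summed (beta + gamma*s + rho*f) per KC.

    params maps KC -> (beta, gamma, rho); successes and failures map
    KC -> counts of the student's prior correct and incorrect attempts.
    """
    logit = 0.0
    for kc in kcs:
        beta, gamma, rho = params[kc]
        logit += beta + gamma * successes.get(kc, 0) + rho * failures.get(kc, 0)
    return 1.0 / (1.0 + math.exp(-logit))


# Hypothetical content model for one programming problem.
weights = {"for_loop": 0.9, "int_variable": 0.1, "array_access": 0.7}
reduced = reduce_content_model(weights, k=2)

# Placeholder parameters (not fitted to any data).
params = {kc: (-0.5, 0.3, 0.1) for kc in weights}
p_correct = pfa_probability(reduced, params, {"for_loop": 3}, {"array_access": 1})
```

With fewer KCs per problem, each prediction sums fewer terms and fewer parameters need to be fitted and stored, which is where the time and space savings come from.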

Publications

Multifaceted Evaluation of Learner Models for Practitioners

We have proposed two state-of-the-art frameworks for the data-driven evaluation of student models, aimed at practitioners: the Polygon framework and the Learner Effort-Outcomes Paradigm. We also explored the challenges of using observational data to answer research questions such as determining the importance of example usage.

Polygon framework

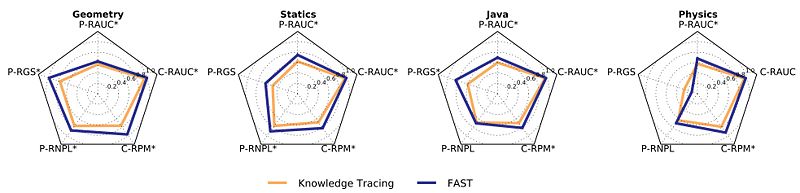

Latent variable models, such as the popular Knowledge Tracing method, are often used to enable adaptive tutoring systems to personalize education. However, finding optimal parameters for latent variable models is usually a difficult non-convex optimization problem. Prior work has reported that latent variable models obtained from educational data vary in their predictive performance, plausibility, and consistency. Unfortunately, there are still no unified quantitative measurements of these properties. This paper suggests a general unified framework (that we call Polygon) for multifaceted evaluation of student models. The framework takes all three dimensions mentioned above into consideration and offers novel metrics for the quantitative comparison of different student models. These properties affect the effectiveness of the tutoring experience in ways that traditional predictive performance metrics fail to capture. The present work demonstrates our methodology by comparing Knowledge Tracing with a recent model called Feature-Aware Student knowledge Tracing (FAST) on datasets from different tutoring systems. Our analysis suggests that FAST generally improves on Knowledge Tracing along all dimensions studied.
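For readers unfamiliar with Knowledge Tracing, the following minimal sketch shows the standard Bayesian Knowledge Tracing equations for a single skill: a prediction step and a Bayesian update followed by a learning transition. It is not the Polygon framework or FAST. The function names and the parameter values are placeholders chosen for illustration, and all parameters are assumed to lie strictly between 0 and 1.

```python
def bkt_predict(p_known, guess, slip):
    """Probability that the next answer is correct."""
    return p_known * (1 - slip) + (1 - p_known) * guess


def bkt_update(p_known, correct, learn, guess, slip):
    """Update the mastery estimate after observing one answer.

    First condition the mastery probability on the observed answer
    (Bayes' rule), then apply the chance of learning at this step.
    Assumes guess and slip are strictly between 0 and 1, so the
    denominator is never zero.
    """
    if correct:
        num = p_known * (1 - slip)
        den = num + (1 - p_known) * guess
    else:
        num = p_known * slip
        den = num + (1 - p_known) * (1 - guess)
    posterior = num / den
    return posterior + (1 - posterior) * learn


# Placeholder parameters (hypothetical, not fitted to any dataset).
p_init, learn, guess, slip = 0.3, 0.2, 0.2, 0.1

p = p_init
trace = []
for answer in [True, False, True, True]:
    trace.append(bkt_predict(p, guess, slip))
    p = bkt_update(p, answer, learn, guess, slip)
```

Because the mastery state is latent, different parameter sets can fit the same answer sequences about equally well while implying very different learning behavior, which is one reason plausibility and consistency matter alongside predictive performance.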

Learner Effort-Outcomes Paradigm (Leopard)

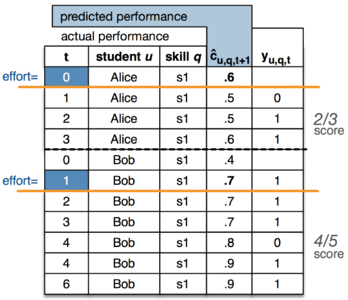

Classification evaluation metrics are often used to evaluate adaptive tutoring systems, that is, programs that teach and adapt to humans. Unfortunately, it is not clear how intuitive these metrics are for practitioners with little machine learning background. Moreover, our experiments suggest that the existing convention for evaluating tutoring systems may lead to suboptimal decisions. We propose the Learner Effort-Outcomes Paradigm (Leopard), a new framework to evaluate adaptive tutoring. We introduce Teal and White, novel automatic metrics that apply Leopard and quantify the amount of effort required to achieve a learning outcome. Our experiments suggest that our metrics are a better alternative for evaluating adaptive tutoring.